Creating A 360 Video Using Maya

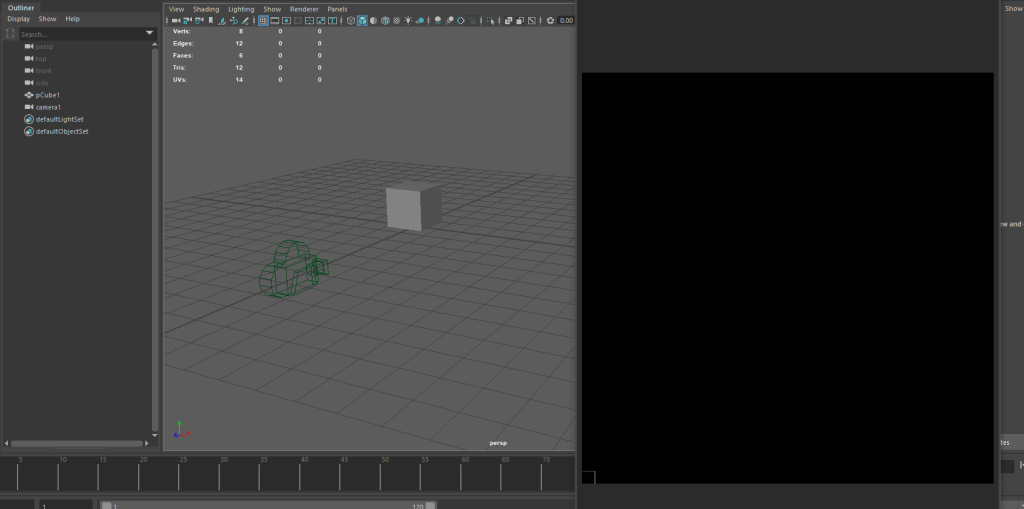

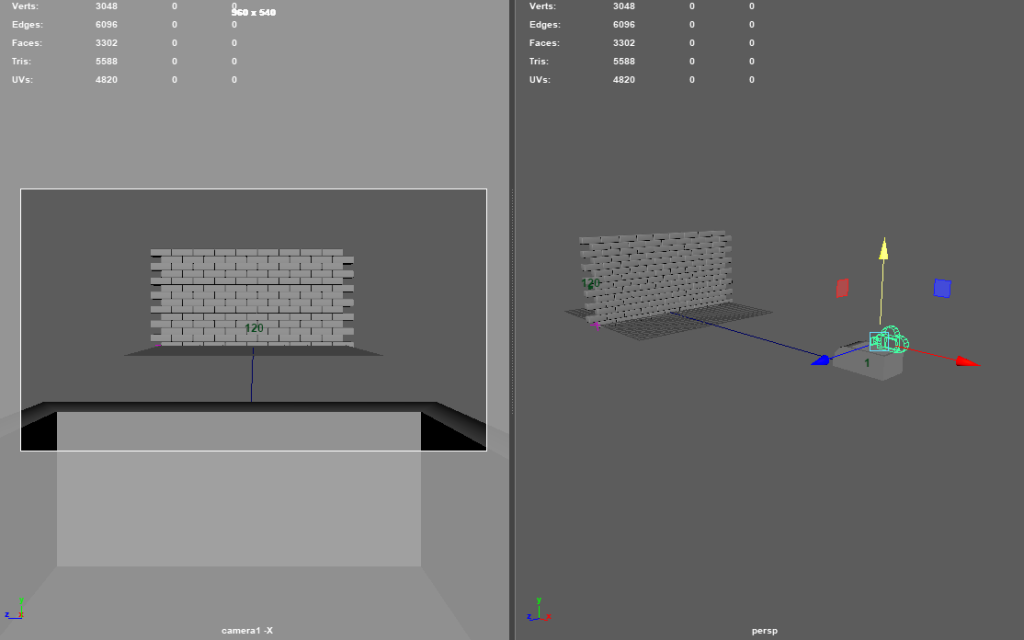

For my first attempt at creating a 360 scene, I decided to keep things simple, just to learn the basics. After placing a cube and setting up the VR camera correctly, the only thing left was to render it.

Unfortunately, as seen above, a black screen was the only outcome. After researching online, I realised that an Arnold light was required in order for the scene to be visible when rendered.

It had been a while since I had used Maya…

With the Arnold lighting now set up, it was time to wait another 20 minutes for the frames to render.

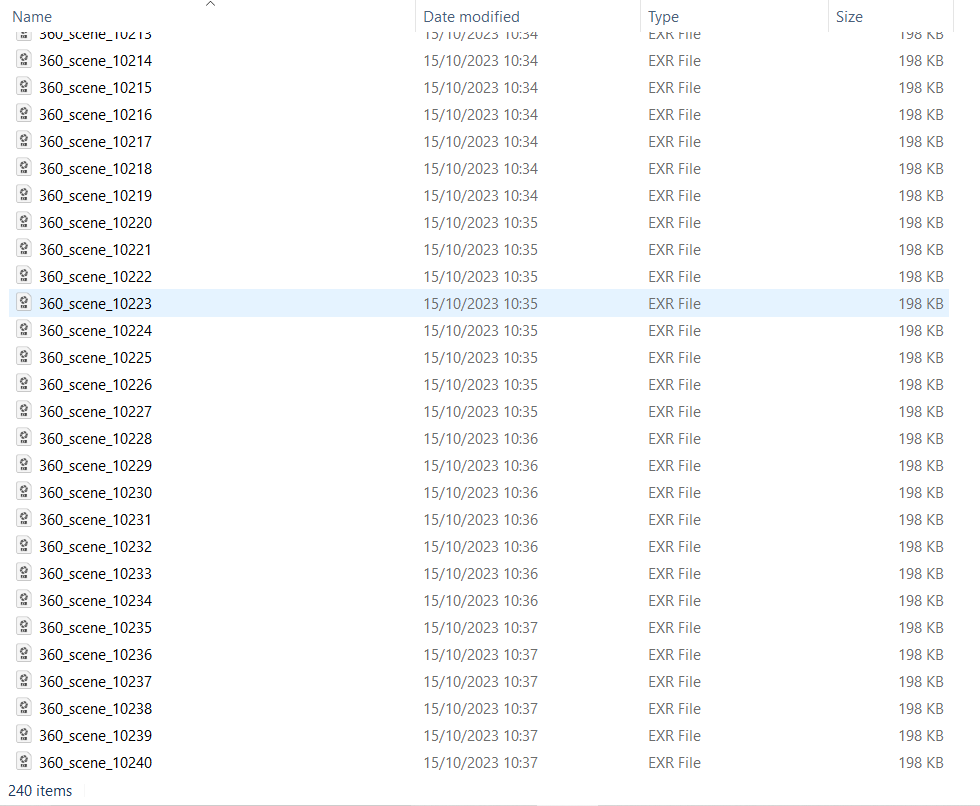

One drawback of using Maya to create and render 360 videos is that even a short video requires a lot of frames. I set my video to 24fps for 10 seconds, so 240 frames had to be rendered. As mentioned previously, this took only roughly 20 minutes; however, for my production piece (minimum duration of 3 minutes), the number of frames and the render time will increase massively.
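The render-time concern can be made concrete with a quick calculation. This is a rough sketch that assumes every frame takes about as long as in the 10-second test (roughly 20 minutes for 240 frames, so about 5 seconds per frame); a more detailed or higher-resolution scene would take longer per frame. The function names are my own, purely for illustration.

```python
# Rough render-time estimate. Assumption: each frame costs about the same
# as in the 10-second test render (about 20 minutes for 240 frames).

def frame_count(fps: int, seconds: int) -> int:
    """Number of frames Maya must render for a clip."""
    return fps * seconds


def render_hours(frames: int, seconds_per_frame: float) -> float:
    """Total render time in hours at a fixed per-frame cost."""
    return frames * seconds_per_frame / 3600


test_frames = frame_count(24, 10)            # the 10-second test clip
seconds_per_frame = (20 * 60) / test_frames  # measured cost per frame

full_frames = frame_count(24, 3 * 60)        # a 3-minute piece at 24fps
print(test_frames, seconds_per_frame, full_frames,
      render_hours(full_frames, seconds_per_frame))
```

Under that assumption, a 3-minute piece at 24fps comes to 4,320 frames and roughly 6 hours of rendering, compared with 20 minutes for the test.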

This is something I will keep in mind when deciding what medium and software to use for my production piece.

After rendering all 240 frames, I imported them into Premiere Pro and uploaded the video to YouTube.

The final product turned out fine, as my main goal from this exercise was to understand how 360 videos are rendered and uploaded to YouTube. If I decide to use this method for my production piece, I would increase the resolution slightly, as YouTube tends to compress videos, which makes them look worse. Of course, this would also increase the render time, which is something to weigh up.

Maya MASH Network

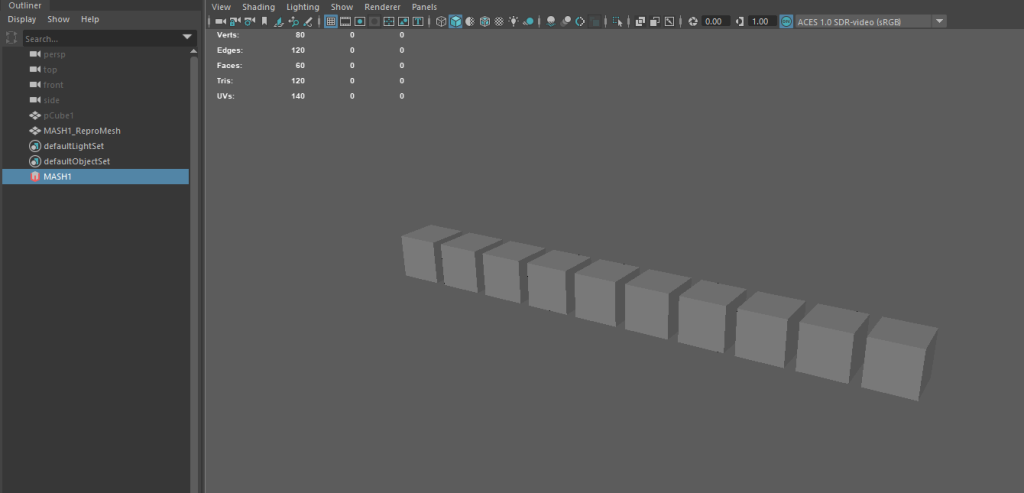

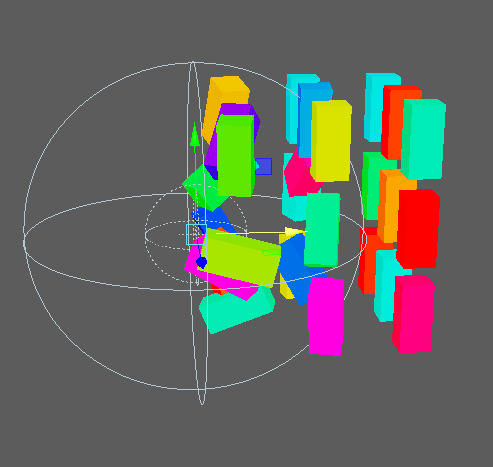

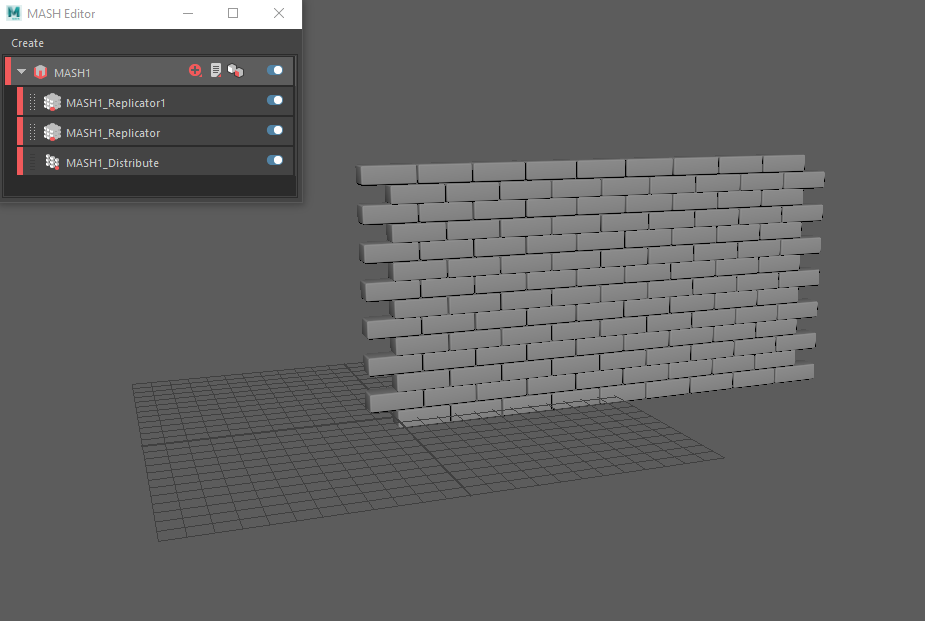

MASH in Maya can be used to create a wide variety of effects that would otherwise be impractical to build by hand.

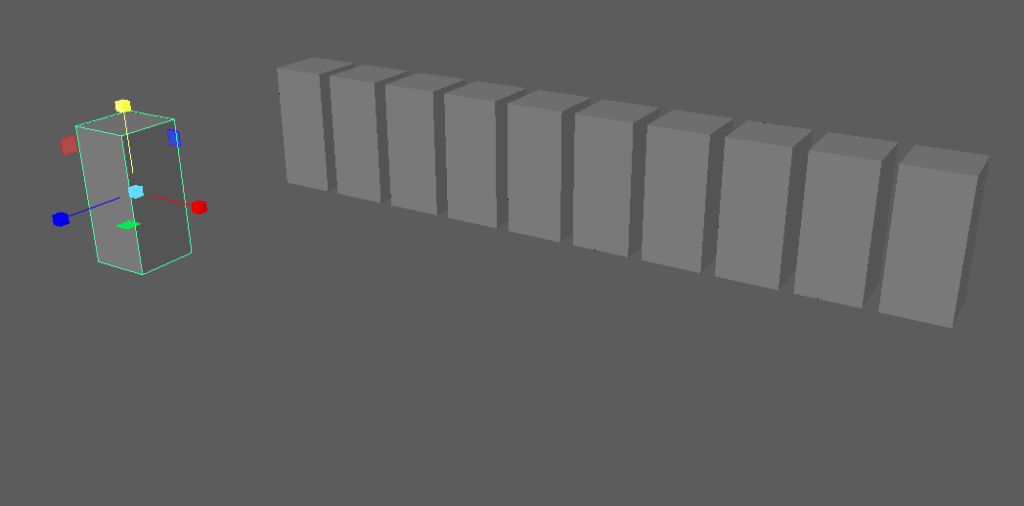

A huge benefit of MASH is that changing the original shape also changes every clone. This is very useful when making changes later in the process: instead of starting from scratch, you can simply unhide the original and edit it.

The nodes are easy to understand and extremely accessible. Just from playing around with them, I can already envision how they could be used to create explosions or dust particles.

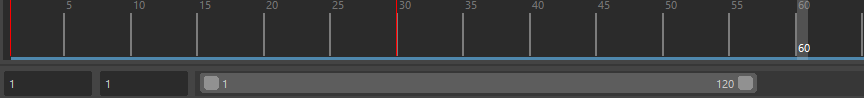

The timeline is simple to use as well, since everything is animated by setting keys. My only concern with MASH is that it may take a lot of computing power to render once keys are set to randomise multiple attributes such as colour, scale and position. Therefore, whilst I would love to use MASH, it remains to be seen whether it is a feasible option for my production piece.

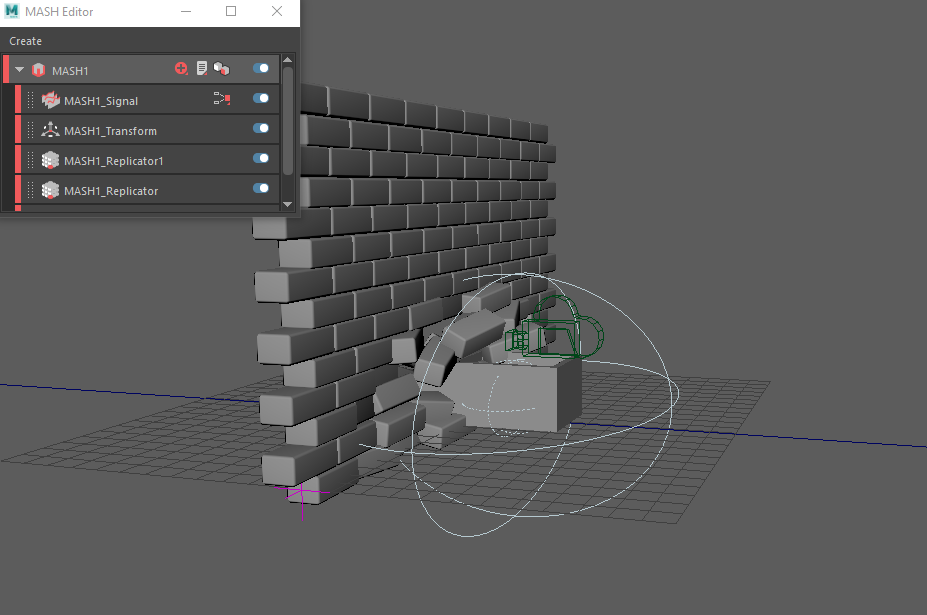

Falloff objects can also be used to create interesting effects, as objects are only affected when they are inside the radius of the falloff sphere.
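To illustrate the idea behind a falloff, here is a generic Python sketch. It is not MASH's actual implementation: it assumes a spherical falloff with a linear fade from full strength at the centre to nothing at the edge, and `falloff_weight` and `push_away` are hypothetical names. Anything outside the radius gets a weight of 0 and stays exactly where it is.

```python
import math

# Generic sketch of a spherical falloff (assumed linear fade; not MASH's
# internal code). Points outside the radius get weight 0 and are left
# untouched; points inside get a weight between 0 and 1.

def falloff_weight(point, centre, radius):
    """Return 1.0 at the centre, fading linearly to 0.0 at the radius."""
    distance = math.dist(point, centre)
    if distance >= radius:
        return 0.0
    return 1.0 - distance / radius


def push_away(point, centre, radius, strength):
    """Hypothetical helper: move a point away from the centre, scaled by its weight."""
    distance = math.dist(point, centre)
    weight = falloff_weight(point, centre, radius)
    if weight == 0.0 or distance == 0.0:
        # Outside the sphere (or exactly at the centre, where there is
        # no direction to push in), so the point does not move.
        return tuple(point)
    return tuple(
        p + (p - c) / distance * strength * weight
        for p, c in zip(point, centre)
    )


objects = [(1.0, 0.0, 0.0), (1.5, 0.5, 0.0), (4.0, 0.0, 0.0)]
sphere_centre = (0.0, 0.0, 0.0)
for obj in objects:
    print(obj, "->", push_away(obj, sphere_centre, radius=2.0, strength=4.0))
```

The first two objects sit inside the sphere and are pushed outwards (the closer one more strongly), while the third is outside the radius and is unaffected.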

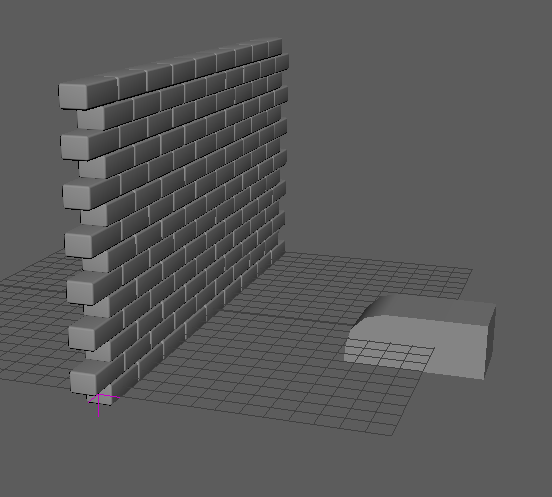

One such effect could be a brick wall being smashed.

By attaching a camera and a falloff object to the cart, and creating a path for it to follow with Maya's curve tools, I was able to create a first-person experience of smashing through a wall.

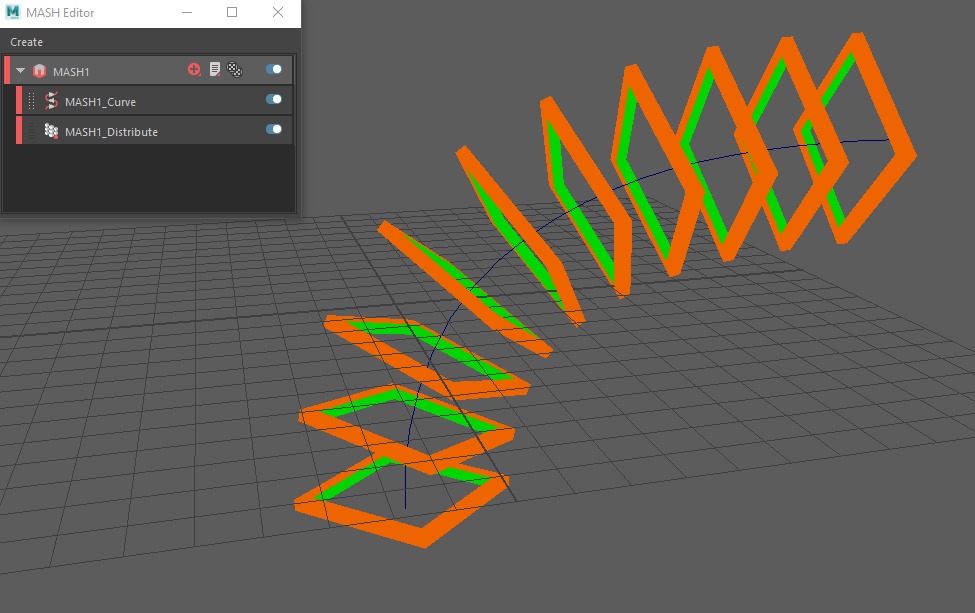

Perhaps the most interesting effect I created using MASH was an abstract tunnel.

By using a curve to define the path the shapes move along, I was able to create an almost hypnotic effect. Whilst this is relatively simple, experimenting with different shapes, curves and speeds could produce something compelling enough for me to consider for my production piece.
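The idea of shapes flowing along a curve can be sketched outside Maya as well. The Python below is a hypothetical illustration, not MASH code: it assumes a helix-shaped curve running towards a camera at the origin, and the names `curve_point` and `clone_positions` and all the numbers are made up. Clones are spaced evenly along the curve, every clone advances by the same amount each frame, and each one wraps back to the far end when it reaches the camera, so the stream never ends.

```python
import math

# Hypothetical sketch: clones spaced evenly along a helix that runs towards
# a camera at the origin. Each frame, every clone advances by `speed` along
# the curve and wraps back to the far end, giving an endless stream.
# The curve shape, names and numbers are illustrative assumptions.

def curve_point(u, length=100.0, radius=5.0, turns=3.0):
    """Point on a helix for u in [0, 1): u = 0 is far away, u -> 1 nears the camera."""
    angle = 2 * math.pi * turns * u
    return (radius * math.cos(angle), radius * math.sin(angle), -length * (1 - u))


def clone_positions(count, frame, speed):
    """Positions of `count` clones at a frame; `speed` is the curve fraction moved per frame."""
    positions = []
    for i in range(count):
        u = (i / count + speed * frame) % 1.0  # even spacing plus travel, wrapped
        positions.append(curve_point(u))
    return positions


# Where the first clone sits at the start of each second of a 24fps clip.
for frame in range(0, 96, 24):
    print(frame, clone_positions(count=20, frame=frame, speed=0.01)[0])
```

Changing `turns`, `radius` or `speed` corresponds loosely to experimenting with different curves and speeds.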

Unlike the previous MASH techniques, this one is simple (yet effective), so I would be far less concerned about the computing power needed to render my piece.

FrameVR

FrameVR is a website that allows for 3D collaboration and creation. Being able to invite friends or co-workers into your session to build a space together is a seamless and useful feature for team projects.

Being able to upload images directly from Google and 3D models directly from Sketchfab is very practical, as you can build a coherent scene within minutes.

Using the built-in particle system, as well as a 360 image of space, I was easily able to create this scene of a rocket ship on fire.

Potential Idea:

Whilst FrameVR has some great features, such as being able to quickly import models, overall I found it too simple for creating complex scenes. Compared to Maya and game engines such as Unity and Unreal, what can be created is quite limited.

Instead of being used for complex scenes, however, FrameVR could be used to create a digital portfolio for artists. Having concept art on the walls, or the artist’s 3D models placed around the space, may be more effective than a standard 2D portfolio. Letting the user walk through what is essentially an art gallery of the artist’s work, and view the models from any angle, would be something interesting to consider for my production piece.

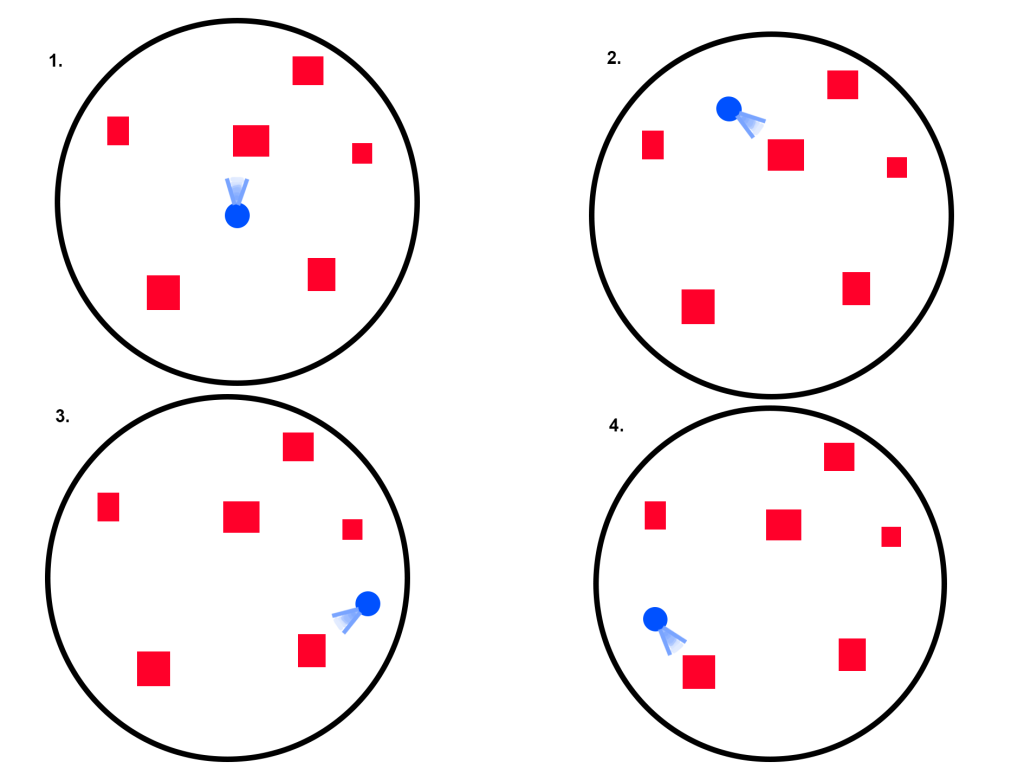

Key: Red = 3D Models, Blue = User

I mocked up a quick concept storyboard for this idea to see if it could work. The space around each model allows the user to view it from any angle they want.

Combining 360 Video & MASH Idea

Whilst completing the abstract tunnel tutorial, I could not help but think that it resembled a certain scene from 2001: A Space Odyssey (Kubrick, 1968).

More specifically, it reminded me of the “Star Gate” sequence towards the end of the film (Escape for adventures, 2017). I believe the bright abstract shapes flowing towards the camera are possible to achieve in Maya via MASH.

Consequently, my first idea for the production piece is a rough recreation of this iconic scene.

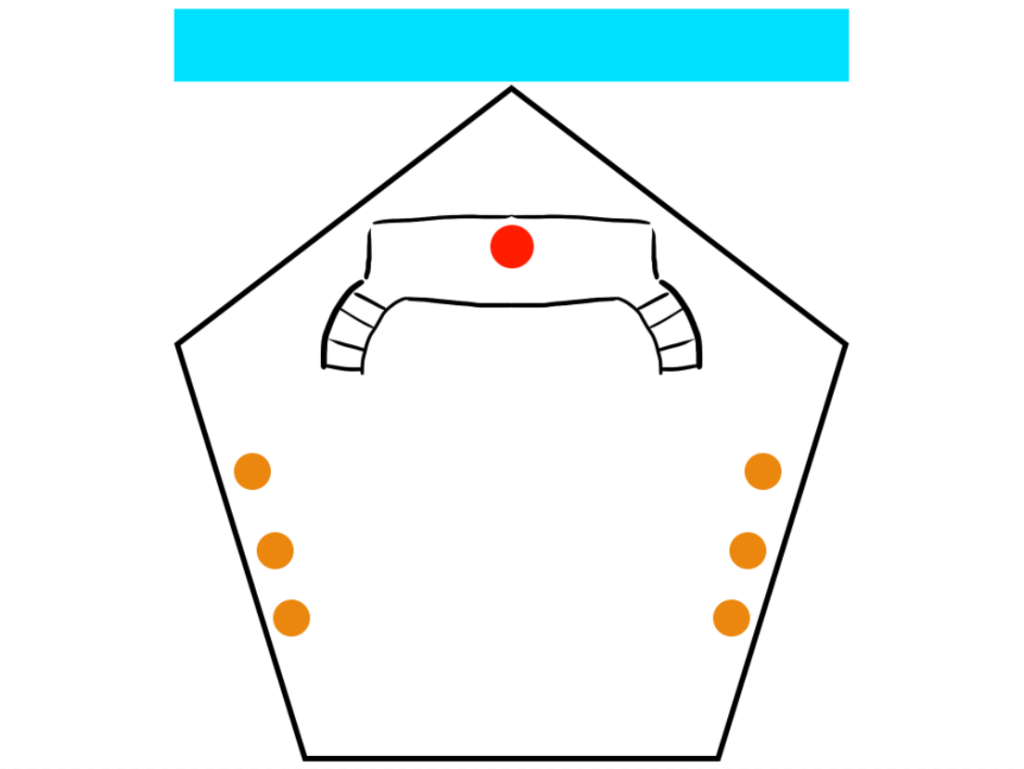

Key: Red = User, Blue = MASH sequence, Orange = Crewmate seats

As this is a 360 experience, I needed to think about the entire environment around the user. Therefore, I quickly sketched a top-down view of the spaceship. The idea is that the main attraction is outside the front window, directly ahead of the user. This is where the tunnel effect, alongside various other effects, would occur.

Motion Sickness:

Motion sickness in VR “happens when your brain receives conflicting signals about movement in the environment around you” (Thompson, 2023).

There are two main types of motion sickness to consider for this idea.

Technology-based motion sickness is caused by the hardware not materialising “the virtual surroundings fast enough to effectively simulate what the eyes would see if the virtual experience was real” (Harmony Studios, 2017). This can be countered with higher frame rates than usual, even above 60 FPS. This may be a problem for this project, as rendering a 3-minute experience at over 60 FPS is unrealistic without a supercomputer.
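To put a rough number on this, assume the roughly 5 seconds per frame from my 10-second test render (20 minutes for 240 frames) stays the same, which is optimistic for a more detailed scene. A 3-minute (180-second) experience at 60 FPS would then work out as:

```latex
\begin{aligned}
\text{frames} &= 180\ \text{s} \times 60\ \text{fps} = 10\,800 \\
\text{render time} &\approx 10\,800 \times 5\ \text{s} = 54\,000\ \text{s} = 15\ \text{h}
\end{aligned}
```

That is two and a half times the cost of the same piece at 24fps, and any frame rate above 60 FPS pushes it higher still.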

The other type of motion sickness is motion-based. It occurs when the body’s sensory organs that make up the vestibular system “become de-synchronised with one another” (Harmony Studios, 2017). This mainly occurs when the user is allowed to move around the environment. There are very “few problems if the visual perspective is stationary” (Harmony Studios, 2017), and as the user stays in one place in this idea, this type of motion sickness should be unlikely.

As many as 70% of VR users experience motion sickness (Kim, 2019). To avoid adding to that statistic, it is important to consider and design around both of these types of motion sickness.

Eye Strain:

Eye strain is a “common issue” when using a VR headset due to users focusing “on a pixelated screen very close to their eyes” (Nvision, 2020).

Objects and UI that are placed too close to the user will contribute to eye strain and ultimately ruin their experience. For this project, I will need to make sure, through multiple playtests, that the Maya MASH effect sits at a safe and comfortable distance from the user.

One way to limit eye strain is for the user to “take frequent breaks” (Tyrone, 2022). This is less necessary for this project, as the experience is only planned to be 3 minutes long; however, if it were longer, a message at the start suggesting that users take breaks would be a good idea.

References

- 2001: A Space Odyssey (1968) Directed by Stanley Kubrick [DVD]. Metro-Goldwyn-Mayer.

- Escape for adventures (2017) 2001:A Space Odyssey_THE “Star Gate”visual effects_HD [Video]. Available online: https://www.youtube.com/watch?v=1DNbkKBW0K8 [Accessed 22/10/2023].

- Harmony Studios (2017) VR sickness: what it is and how to stop it [Blog post]. Harmony. 14 September. Available online: https://www.harmony.co.uk/vr-motion-sickness/ [Accessed 23/10/2023].

- Kim, M. (2019) Why you feel motion sickness during virtual reality. ABC News, Internet edition. 25 August. Available online: https://abcnews.go.com/Technology/feel-motion-sickness-virtual-reality/story?id=65153805 [Accessed 25/10/2023].

- Nvision (2020) Why VR (& VR headsets) can cause serious eye strain & pain. Nvision, Internet edition. 20 February. Available online: https://www.nvisioncenters.com/education/vr-and-eye-strain/ [Accessed 25/10/2023].

- Thompson, S. (2023) Motion sickness in VR: why it happens and how to minimize it [Blog post]. VirtualSpeech. 27 June. Available online: https://virtualspeech.com/blog/motion-sickness-vr [Accessed 23/10/2023].

- Tyrone (2022) How to reduce eye strain in VR [Blog post]. Serious Virtual Worlds. 13 October. Available online: https://seriousvirtualworlds.net/how-to-reduce-eye-strain-in-vr/ [Accessed 24/10/2023].