Introduction:

This project will focus on Virtual Reality (VR) and an underexplored area of the medium: accessibility for people with disabilities. Previous research revealed a lack of affordable options for experiencing VR for those who are unable to use their arms or hands. The aim of this project will therefore be to explore one option, voice control, that could allow these people to explore the world of Virtual Reality through video games.

This method may not be the final solution for those currently unable to use VR. Nevertheless, the technology used in this prototype may act as an intermediary until something such as brain-controlled VR games can be established on the market at an affordable price. The only equipment required for the prototype to work is a Virtual Reality headset and a microphone, which is more feasible and affordable than the alternative.

To showcase this technology, an escape room VR game will be created. The mechanics required to complete such a game are relatively simple, which makes it a good first test.

Previous Voice Controlled Prototype & Past Examples:

Last year, during the rapid prototype design course, one of the games that I created was voice controlled.

It was a 2D top-down horror game in which the player had to say “up”, “down”, “left” or “right” in order to move towards the exit. The catch was that if they made any noise whilst the heartbeat sound was loud, they died. This prototype never went any further; however, it taught me how to use voice commands and sound detection within Unity.
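
The movement rule described above can be sketched as follows. This is a minimal, engine-agnostic illustration in Python rather than the Unity code from the prototype. The names `Player`, `DIRECTIONS` and `handle_input`, and the assumption that the speech recogniser delivers one word at a time, are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical grid offsets for each spoken command
# (x grows to the right, y grows upwards).
DIRECTIONS = {
    "up": (0, 1),
    "down": (0, -1),
    "left": (-1, 0),
    "right": (1, 0),
}


@dataclass
class Player:
    x: int = 0
    y: int = 0
    alive: bool = True


def handle_input(player, word, heartbeat_loud):
    """Apply one recognised word to the player.

    Any sound made while the heartbeat is loud kills the player.
    Otherwise, a known direction word moves them one tile and
    unknown words are ignored.
    """
    if not player.alive:
        return player
    if heartbeat_loud:
        player.alive = False
        return player
    step = DIRECTIONS.get(word.strip().lower())
    if step is not None:
        player.x += step[0]
        player.y += step[1]
    return player
```

Keeping the vocabulary in a single lookup table means that a new command only needs a new entry, and unrecognised words fall through without moving the player.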

When contemplating a Virtual Reality project that could help people with disabilities, I remembered this prototype. However, instead of voice control being a fun game mechanic, this time it could be used to help more people experience VR by taking away the need for controllers.

Virtual Reality allows hands-free gameplay to be more adventurous, as the camera is controlled by the user moving their head. On a standard computer, the camera is typically controlled with a mouse, and attempting to mimic that with voice controls often leads to a clunky mess. As a result, my previous prototype had a fixed top-down camera.

Other games have made use of voice commands in the past as well. Games such as Phoenix Wright: Ace Attorney, Welcome To The Game and Lifeline have all attempted to use voice recognition, with varying degrees of success (Howarth, Teressa & Go, 2023).

Ace Attorney (Capcom, 2019) allowed users to shout “Objection” instead of pressing a button. It could be argued that this makes the game more immersive; however, it can just as easily be seen as a gimmick.

Whilst playing Welcome To The Game (Reflect Studios, 2016), players have to stay quiet so as not to alert the kidnappers. Even though this is potentially an effective way to build tension in a horror game, it is better described as microphone detection than as voice commands, since the game only reacts to how loud the player is and not to what they say.
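
To illustrate the distinction, microphone detection only needs the loudness of the incoming audio, not its meaning. Below is a minimal Python sketch, assuming the audio arrives as floating-point samples between -1.0 and 1.0. The function names and the threshold of 0.1 are hypothetical and are not taken from Welcome To The Game.

```python
import math


def rms_level(samples):
    """Return the root-mean-square amplitude of a block of samples."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def is_too_loud(samples, threshold=0.1):
    """Report whether a block of audio exceeds the (assumed) threshold."""
    return rms_level(samples) > threshold
```

A check like this never needs to know which word was spoken, which is why it is far simpler than true voice command recognition.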

Lifeline (Sony Interactive Entertainment, 2003), on the other hand, attempts to let the user control their character through voice commands. However, whilst the game was ambitious for the PS2 era, the voice recognition often did not work correctly, resulting in player frustration.

Conclusion:

Overall, video games in the past have not fully realised the potential of voice commands. They have either been used as gimmicks, as in Ace Attorney, or been broken and frustrating, as in Lifeline. The potential is there for voice commands to be more than just a gimmick in the video game world, as voice recognition technology has improved massively since the PS2 era. Amazon’s Alexa is proof of this, being able to understand different accents and languages (Wallis, 2022). With Virtual Reality expanding the types of games that can be played hands-free, the prospect of those unable to use their hands being able to experience video games is getting closer.

Setting Up VR Use With Unity:

Since the headset that I own is a Meta Quest 2, which is designed to be used without the need for a computer, a cable to connect the two was required. After watching a tutorial (GameDevBill, 2020), I downloaded the Oculus desktop app and plugged the headset in.

Thankfully, everything went smoothly, even though the requirements for Meta Quest Link to work can be quite daunting (Meta, 2023). Now that my VR headset was connected to my PC, it was time to bring Unity into the mix.

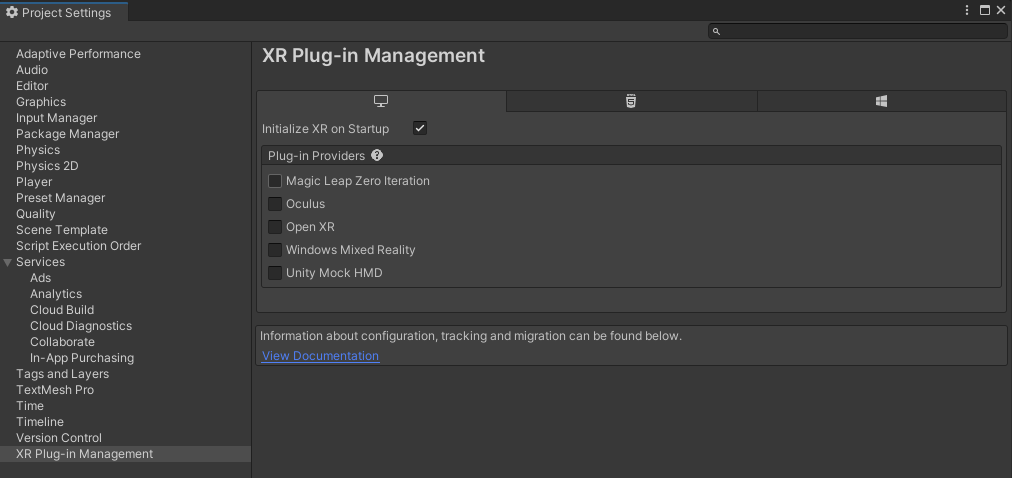

Before Unity would work on the headset, however, some project settings had to be changed (Valem Tutorials, 2022).

XR Plug-in Management is a Unity package which allows various VR headsets to be supported by a project. Although there is a specific Oculus option, I chose OpenXR because it supports a large number of VR headsets, which would be useful if this game were ever published.

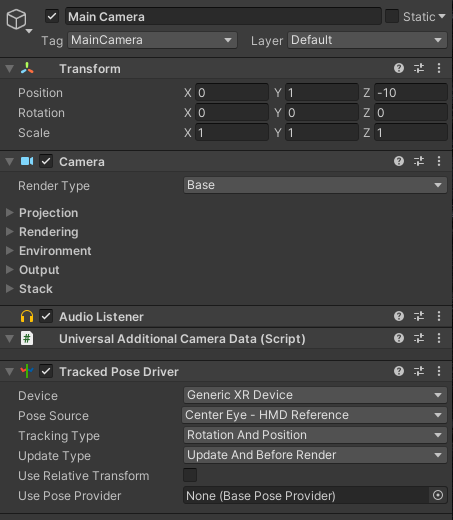

After saving these settings and pressing play, I could see my empty scene in Unity. However, I could not move the camera with my head.

Adding a Tracked Pose Driver component to the camera enabled head tracking automatically. This component is built into Unity and is very useful for quickly starting a VR scene without writing any code.

After creating a quick scene, I tested the head tracking feature in Unity. A huge positive of Quest Link is that by pressing play in the Unity editor, the game automatically connects to the headset within seconds. This allows for quick iteration and a much more efficient workflow.

The alternative would be having to build the game every time a change needed to be tested and to upload the game to the headset. From previous experience, building a game in Unity can take as long as ten minutes, especially as it gets more and more complex. Therefore, Quest Link is a necessity for quickly developing VR games in Unity.

References:

- Capcom (2019) Phoenix Wright: Ace Attorney Trilogy [Video game]. Available online: https://store.steampowered.com/app/787480/Phoenix_Wright_Ace_Attorney_Trilogy/ [Accessed 25/11/2023].

- GameDevBill (2020) Quest Link in Unity – How to Use Unity’s play mode with Oculus Quest Link [Video]. Available online: https://www.youtube.com/watch?v=sSD798Ov2oY [Accessed 27/11/2023].

- Howarth, K., Teressa, C. M. & Go, S. (2023) 19 Games That Use Voice Recognition. TheGamer, Internet edition. 15 February. Available online: https://www.thegamer.com/games-with-voice-commands-speech-recognition/ [Accessed 25/11/2023].

- Meta (2023) Requirements to use Meta Quest Link. Available online: https://www.meta.com/en-gb/help/quest/articles/headsets-and-accessories/oculus-link/requirements-quest-link/ [Accessed 26/11/2023].

- Reflect Studios (2016) Welcome to the Game [Video game]. Available online: https://store.steampowered.com/app/485380/Welcome_to_the_Game/ [Accessed 25/11/2023].

- Sony Interactive Entertainment (2003) Lifeline [Video game]. Available online: https://en.wikipedia.org/wiki/Lifeline_(video_game) [Accessed 25/11/2023].

- Valem Tutorials (2022) How to Make a VR Game in Unity – PART 1 [Video]. Available online: https://www.youtube.com/watch?v=HhtTtvBF5bI [Accessed 28/11/2023].

- Wallis, J. (2022) The Technology In Amazon Alexa – The Tech Behind Series. Intuji, Internet edition. 1 December. Available online: https://intuji.com/the-tech-behind-amazon-alexa/ [Accessed 26/11/2023].