Setting Up A Reticle:

A reticle is a small dot in the middle of the screen that helps orient players (Polygon, 2019). It also helps communicate information to the player by changing colour or shape (Park, 2016).

Affordance refers to the properties of an object that make its possible uses clear (Shaver, 2018). A consistent shape and colour language builds trust with players and helps them understand what they can do, even if only subconsciously (Shaver, 2018).

In this game, the reticle turns from white to green whenever it is over something the player can interact with. Because this response is consistent, the colour change becomes an affordance over time: the player learns that a green reticle means an object is interactable.
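The white-to-green rule can be summarised as a single function. This is a minimal, engine-agnostic sketch in Python rather than the project's Unity code. `Hit`, `reticle_colour` and the exact RGB values are hypothetical. The sketch assumes that something reports what is under the reticle and whether it is interactable; in Unity this would typically be a ray cast from the camera.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

RGB = Tuple[int, int, int]

WHITE: RGB = (255, 255, 255)  # default reticle colour (assumed value)
GREEN: RGB = (0, 255, 0)      # reticle colour over interactable objects (assumed value)


@dataclass
class Hit:
    """Hypothetical result of a ray cast from the centre of the view."""
    object_name: str
    interactable: bool


def reticle_colour(hit: Optional[Hit]) -> RGB:
    """Green if the reticle is over an interactable object, otherwise white."""
    if hit is not None and hit.interactable:
        return GREEN
    return WHITE
```

Keeping the rule in one place means every interactable object produces the same response, and that consistency is what makes the colour change learnable.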

As well as creating affordance, the reticle should help reduce motion sickness, a major issue in VR. It gives the player something stationary to focus on whilst their character is moving, which reduces the chance of motion sickness (Polygon, 2019).

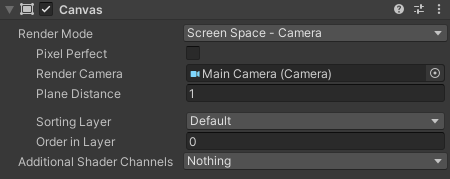

Creating the reticle itself is simple: it is a small square UI element on a Unity Canvas. However, the Canvas needs more thought in VR than in a flat-screen game, because its render mode determines whether the UI appears in the headset at all. The default “Screen Space - Overlay” mode is not displayed in a VR headset. The correct mode for the reticle is “Screen Space - Camera”, as the reticle should stay fixed to the first-person camera. “World Space” mode instead lets UI elements exist independently of the camera and be placed around the level. That is useful, but not for a reticle.

Type Of Movement:

Virtual Reality locomotion is the ability to move from one place to another through the virtual world (Ribeiro, 2021). It is one of the key pillars of a VR gaming experience, so once voice recognition is set up, it will be my first focus.

There are several types of virtual locomotion, and each was worth exploring in order to find the one most suitable for this project.

Room-Scale Based & Motion-Based:

Room-scale locomotion involves the player physically moving within the limits of their own room. Games that use it, such as Beat Saber (Beat Games, 2019), typically involve little travel-based movement.

Motion-based locomotion requires extra sensors that are not usually included with standard VR headsets (Ribeiro, 2021). These sensors track the user’s body movement and accurately reproduce it in the virtual world. VR treadmills are one example: they let the user walk in place whilst their foot movement is tracked (Bond & Nyblom, 2019).

Whilst both options are immersive, neither suits this project, as both require a lot of physical movement. Furthermore, motion-based locomotion requires a lot of expensive equipment. This conflicts with one of the aims of this project: to create an affordable experience for those unable to use their hands.

Controller Based:

Although this method will not be used for this project, it is still important to explore it to fully understand all methods of virtual locomotion available within VR.

This method involves the player using a joystick, thumbstick or trackpad to move their character. Whilst this is a common locomotion technique, it is prone to inducing motion sickness (Bond & Nyblom, 2019). One way to reduce this is to narrow the player’s field of view whilst they are moving. This limits how much motion the player sees in their peripheral vision. Less visible motion means less vestibular mismatch, the conflict between the movement the eyes see and the movement the inner ear senses, which is the cause of motion sickness (Ribeiro, 2021).
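The field-of-view technique can be sketched as a function of movement speed. The linear mapping and the 110° and 60° limits below are illustrative assumptions, not values taken from Ribeiro (2021), and `vignette_fov` is a hypothetical name.

```python
def vignette_fov(speed: float, max_speed: float,
                 full_fov: float = 110.0, min_fov: float = 60.0) -> float:
    """Visible field of view in degrees for the current movement speed.

    When stationary the player sees full_fov. The view narrows linearly
    as speed increases and stays at min_fov from max_speed upwards.
    """
    if max_speed <= 0:
        raise ValueError("max_speed must be positive")
    t = min(max(speed / max_speed, 0.0), 1.0)  # normalised speed, clamped to [0, 1]
    return full_fov - t * (full_fov - min_fov)
```

For example, `vignette_fov(1.5, 3.0)` (moving at half the maximum speed) gives 85.0 degrees. In practice the narrowed view is commonly drawn as a dark vignette around the edges of the screen rather than by changing the camera's real field of view.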

Teleportation Based:

Teleportation is one of the most common locomotion techniques in VR games: the player points to where they want to go and is instantly moved there (Bond & Nyblom, 2019). Whilst this technique is easy to use and requires little effort, it can make the game feel less immersive, as people cannot teleport in real life (Bond & Nyblom, 2019).

However, this technique does allow players to travel around a map quickly and efficiently with limited physical movement, making it a promising candidate for this project. Typically, the user aims at a teleportation point using a hand-held controller. However, with a reticle on the screen, the player should be able to aim using head movement alone.
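Aiming with the head comes down to an angle test between the direction the player is facing and the direction to each teleportation point. The sketch below is an engine-agnostic Python illustration under stated assumptions: the function name, the tuple vectors and the 5° tolerance are hypothetical, and walls are ignored. In Unity, a ray cast from the camera through the reticle (for example with `Physics.Raycast`) would be a more typical way to find what the player is looking at, and it would also respect obstacles.

```python
import math
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def gazed_point(head_pos: Vec3, head_forward: Vec3,
                points: Sequence[Vec3], max_angle_deg: float = 5.0) -> Optional[int]:
    """Return the index of the teleport point nearest the centre of the view.

    A point counts as 'looked at' when the angle between the head's forward
    direction and the direction from the head to the point is at most
    max_angle_deg. Returns None if no point is close enough.
    Obstacles are ignored in this sketch.
    """
    fwd_len = math.sqrt(_dot(head_forward, head_forward))
    if fwd_len == 0:
        return None
    best_index: Optional[int] = None
    best_angle = max_angle_deg
    for i, p in enumerate(points):
        to_p = (p[0] - head_pos[0], p[1] - head_pos[1], p[2] - head_pos[2])
        dist = math.sqrt(_dot(to_p, to_p))
        if dist == 0:
            continue  # point is at the head position, so it has no direction
        cos_a = _dot(head_forward, to_p) / (fwd_len * dist)
        angle = math.degrees(math.acos(max(-1.0, min(1.0, cos_a))))
        if angle <= best_angle:
            best_index, best_angle = i, angle
    return best_index
```

A player at the origin facing along +z with points at (0, 0, 5) and (5, 0, 0) would be "looking at" the first point. Turning to face +x would select the second.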

Suddenly changing the player’s position can make them feel lost and disorientated, and so a blink effect is necessary. A quick fade to black reduces disorientation within players whilst still allowing them to quickly move around the level (Ribeiro, 2021).
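The blink effect can be described as the opacity of a full-screen black overlay. The opacity rises to fully black, the player is moved at that instant, and it then falls back to clear. This is a minimal sketch assuming a linear fade and a hypothetical 0.1-second fade in each direction. `blink_alpha` is an illustrative name, and Ribeiro (2021) does not prescribe these values.

```python
def blink_alpha(t: float, fade_time: float = 0.1) -> float:
    """Opacity of a full-screen black overlay t seconds into a blink.

    0.0 is fully transparent and 1.0 is fully black. The screen fades to
    black over fade_time seconds. The player should be moved when it is
    fully black (t == fade_time), and the scene then fades back in over
    another fade_time seconds.
    """
    if fade_time <= 0:
        raise ValueError("fade_time must be positive")
    if t <= 0 or t >= 2 * fade_time:
        return 0.0
    if t <= fade_time:
        return t / fade_time                 # fading to black
    return (2 * fade_time - t) / fade_time   # fading back in
```

Each frame, the game would set the overlay's alpha to `blink_alpha(elapsed)` and move the player once `elapsed` reaches `fade_time`. Because the move happens while the screen is fully black, the player never sees the sudden change of position.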

Conclusion:

This project aims to create a hands-free Virtual Reality experience that is affordable for disabled users. As a result, teleportation is the best option for locomotion. It can be adapted so that the user only needs to look at a teleportation point and say “move”.

The other three methods, whilst more immersive, require either too much physical movement or a hand-held controller, so they are not suitable for this project.

Unity Voice Recognition:

Surprisingly, setting up voice commands in Unity was fairly simple with the help of a tutorial (Dapper Dino, 2018).

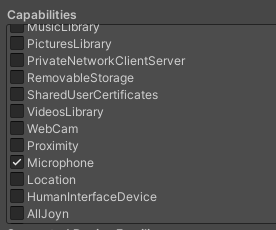

After adding Universal Windows Platform (UWP) as a build platform in Unity, the Microphone capability can be enabled in the Player Settings so that the player’s microphone can be detected. Helpfully, the whole project does not have to be switched to this build platform for it to work. UWP also offers many other capabilities that could be interesting to experiment with in the future, such as access to the player’s webcam.

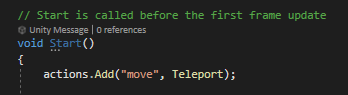

With the microphone setting enabled, Unity’s built-in “KeywordRecognizer” class could be used. It listens for a set of predefined phrases and triggers an action when one of them is recognised. For example, when the player says “move”, the Teleport function is called.
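Unity's KeywordRecognizer is constructed with an array of keywords and raises an `OnPhraseRecognized` event when one of them is heard. A common pattern is to keep a dictionary from each phrase to the function it should trigger. The Python sketch below models only that dispatch step, plus logging the keyword as the project does in the console. `KeywordDispatcher` and its methods are hypothetical names, and speech recognition itself is not modelled.

```python
from typing import Callable, Dict, List


class KeywordDispatcher:
    """Hypothetical stand-in for the phrase -> action lookup used with a
    keyword recogniser. Callers pass in the text the recogniser reported."""

    def __init__(self) -> None:
        self.actions: Dict[str, Callable[[], None]] = {}
        self.log: List[str] = []  # stands in for the Unity console

    def register(self, phrase: str, action: Callable[[], None]) -> None:
        """Associate a spoken phrase with the function it should trigger."""
        self.actions[phrase] = action

    def on_phrase_recognized(self, text: str) -> bool:
        """Log the recognised phrase and run its action; True if one ran."""
        self.log.append(text)
        action = self.actions.get(text)
        if action is None:
            return False
        action()
        return True
```

Registering `"move"` with a teleport function means `on_phrase_recognized("move")` calls that function and records `"move"` in the log. Adding a new voice command is then a single `register` call.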

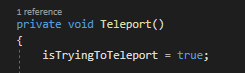

The Teleport function then sets “isTryingToTeleport” to true, which in turn teleports the player to the teleportation point they are looking at.

The recognised keyword is also printed to the console. This is useful for tracking down bugs, as a phrase not being recognised would always be my first suspicion.

Movement Bug Fixes:

The teleportation worked; however, a major issue was that the “isTryingToTeleport” bool stayed true until the player looked at a teleportation point.

This was a problem because if the player said “move” whilst not looking at a teleportation point and then looked at one 30 seconds later, they would instantly teleport.

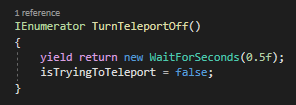

To fix this, a subroutine was created that waits 0.5 seconds for the player to look at a teleportation point after saying “move”. The player now teleports only if they say “move” whilst looking at a teleportation point, or look at one within 0.5 seconds of saying it.

If the player says “move” whilst not looking at a teleportation point, “isTryingToTeleport” is set back to false after 0.5 seconds, so they will not teleport if they look at one afterwards.
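The 0.5-second window can be expressed as a small state machine. The project uses a subroutine that waits 0.5 seconds. This sketch reaches the same behaviour a different way: it stores when “move” was said and compares timestamps on each per-frame `update` call. `TeleportController`, `on_move_command` and `update` are hypothetical names, and the gazed point is assumed to come from whatever detects what the player is looking at.

```python
from typing import Optional

TELEPORT_WINDOW = 0.5  # seconds a "move" command stays valid


class TeleportController:
    """Saying "move" opens a short window. The player teleports only if
    they look at a teleportation point before the window closes."""

    def __init__(self, window: float = TELEPORT_WINDOW) -> None:
        self.window = window
        self.is_trying_to_teleport = False
        self._requested_at: Optional[float] = None

    def on_move_command(self, now: float) -> None:
        """Called when the "move" keyword is recognised."""
        self.is_trying_to_teleport = True
        self._requested_at = now

    def update(self, now: float, gazed_point: Optional[str]) -> Optional[str]:
        """Call every frame; returns the point teleported to, if any."""
        if not self.is_trying_to_teleport or self._requested_at is None:
            return None
        if now - self._requested_at > self.window:
            # Window expired: forget the command so a later glance does nothing.
            self.is_trying_to_teleport = False
            self._requested_at = None
            return None
        if gazed_point is not None:
            self.is_trying_to_teleport = False
            self._requested_at = None
            return gazed_point
        return None
```

With this logic, saying “move” at 0 s and looking at a point 30 s later does nothing, which reproduces the fix. Saying “move” while already looking at a point teleports the player on the same frame.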

The bug fix is demonstrated in this video. Unity recognises the “move” command whilst I am not looking at the teleportation point, but when I then looked at it after 0.5 seconds, I did not teleport, exactly as intended.

By contrast, when I looked directly at the teleportation point and said “move”, the player teleported to that point.

A feature that makes each teleportation point reappear once the player moves off it was also added and is shown in this video. This allows the user to backtrack through the level if they need to.
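One way to model the reappearing points is to track which point the player is standing on. That point is hidden, and it becomes visible again as soon as the player teleports elsewhere. `TeleportPoints` and its methods are hypothetical names. In Unity this might correspond to deactivating and reactivating the point's GameObject, but the project's actual approach is not shown here.

```python
from typing import Iterable, Optional, Set


class TeleportPoints:
    """Hides the point the player stands on and restores it when they leave."""

    def __init__(self, names: Iterable[str]) -> None:
        self.visible: Set[str] = set(names)  # points the player can currently target
        self.occupied: Optional[str] = None  # point the player is standing on

    def teleport_to(self, name: str) -> None:
        if name not in self.visible:
            raise ValueError(f"{name} is not an available teleportation point")
        if self.occupied is not None:
            self.visible.add(self.occupied)  # the point just left reappears
        self.visible.discard(name)           # hide the point now stood on
        self.occupied = name
```

Moving from point "a" to point "b" makes "a" visible again, so the player can return to it. This is what allows backtracking through the level.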

Conclusion:

It was important to choose the correct method of locomotion and get it fully working in a hands-free way before blocking out the level or adding any other complex features. Without hands-free movement the game would be pointless, so the first priority was to get it working without bugs before progressing further.

References:

- Beat Games (2019) Beat Saber [Video game]. Available online: https://store.steampowered.com/app/620980/Beat_Saber/ [Accessed 29/11/2023].

- Bond, D. & Nyblom, M. (2019) Evaluation of four different virtual locomotion techniques in an interactive environment. BS thesis. Blekinge Institute of Technology. Available online: https://www.diva-portal.org/smash/get/diva2:1334294/FULLTEXT02.pdf [Accessed 02/12/2023].

- Dapper Dino (2018) How to Add Voice Recognition to Your Game – Unity Tutorial [Video]. Available online: https://www.youtube.com/watch?v=29vyEOgsW8s&t=213s [Accessed 05/12/2023].

- Park, M. (2016) The Reticle is the Unsung Hero of Modern Shooter Games. Twinfinite, Internet edition. 21 December. Available online: https://twinfinite.net/ps4/reticle-unsung-hero-modern-shooters/ [Accessed 26/11/2023].

- Polygon (2019) How video games save you from motion sickness [Video]. Available online: https://www.youtube.com/watch?v=XEgkQ2EXGdA&t=227s [Accessed 10/12/2023].

- Ribeiro, A. (2021) VR Locomotion: How to Move in VR Environment [Blog post]. Circuit Stream. 12 January. Available online: https://www.circuitstream.com/blog/vr-locomotion [Accessed 02/12/2023].

- Shaver, D. (2018) Invisible Intuition: Blockmesh and Lighting Tips to Guide Players. Available online: https://davidshaver.net/DShaver_Invisible_Intuition_DirectorsCut.pdf [Accessed 29/11/2023].